GNU Astronomy Utilities - Tasks: task #15637, Match RA and Dec catalog to X and...


task #15637: Match RA and Dec catalog to X and Y catalog to find WCS

| Submitter: | Mohammad Akhlaghi <makhlaghi> | ||

| Submitted: | Tue 12 May 2020 01:15:22 AM UTC | ||

| Should Start On: | Mon 11 May 2020 11:00:00 PM UTC | Should be Finished on: | Mon 11 May 2020 11:00:00 PM UTC |

| Category: | Astrometry | Priority: | 5 - Normal |

| Item Group: | Enhancement | Status: | Done |

| Privacy: | Public | Assigned to: | ndanzanello |

| Percent Complete: | 100% | Open/Closed: | Closed |

| Effort: | 0.00 | ||


|

Thu 24 Mar 2022 08:50:45 AM UTC, comment #12: |

Mohammad Akhlaghi <makhlaghi> |

|

Mon 23 Aug 2021 12:56:45 AM UTC, comment #11: This got solved here.

|

Natáli Anzanello <ndanzanello> |

|

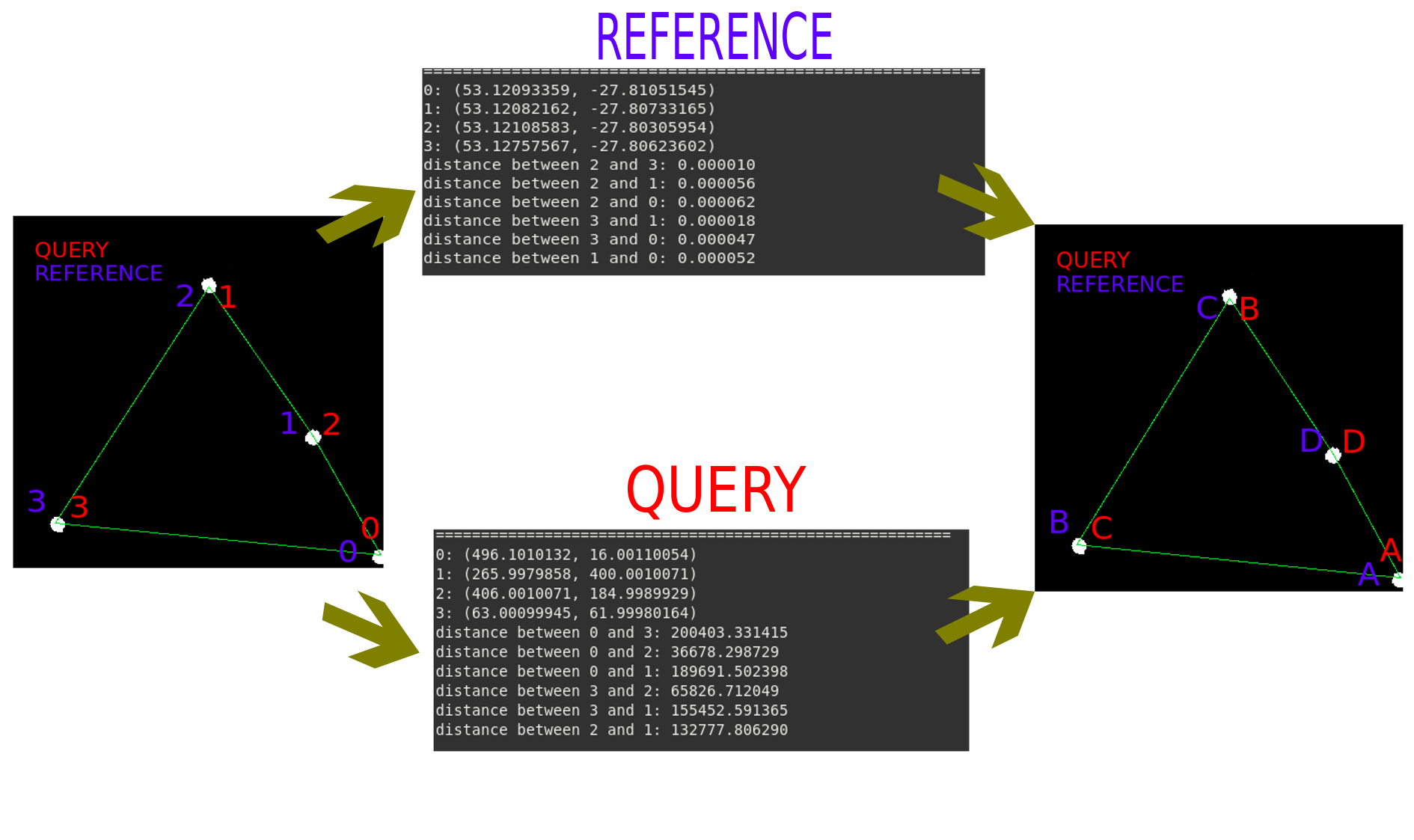

Sun 20 Jun 2021 04:43:10 PM UTC, comment #10: It's not 'vertices_after_match_fixed.png'. It's '4stars_vertices_distances.png'. Sorry for that :)

|

Natáli Anzanello <ndanzanello> |

|

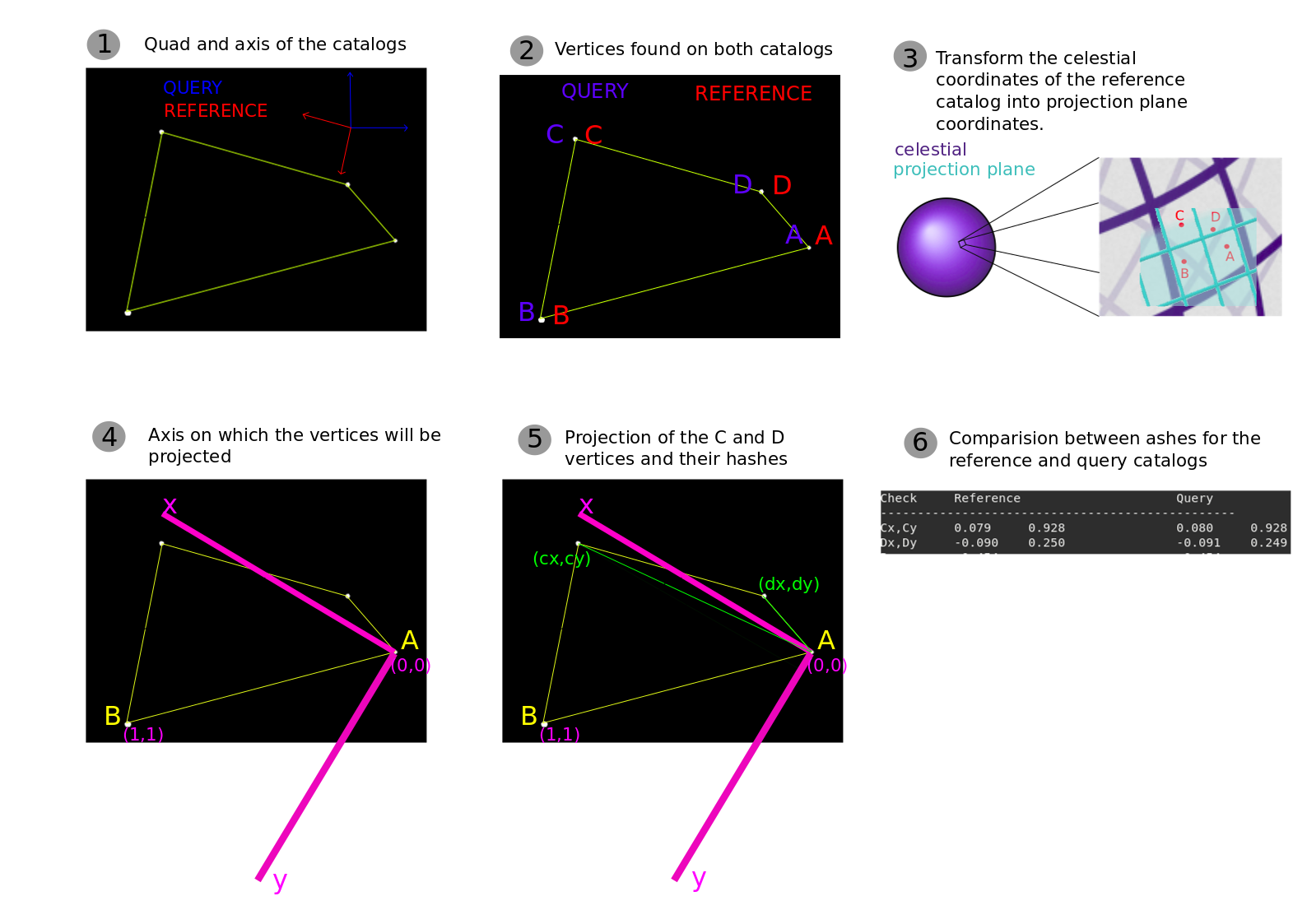

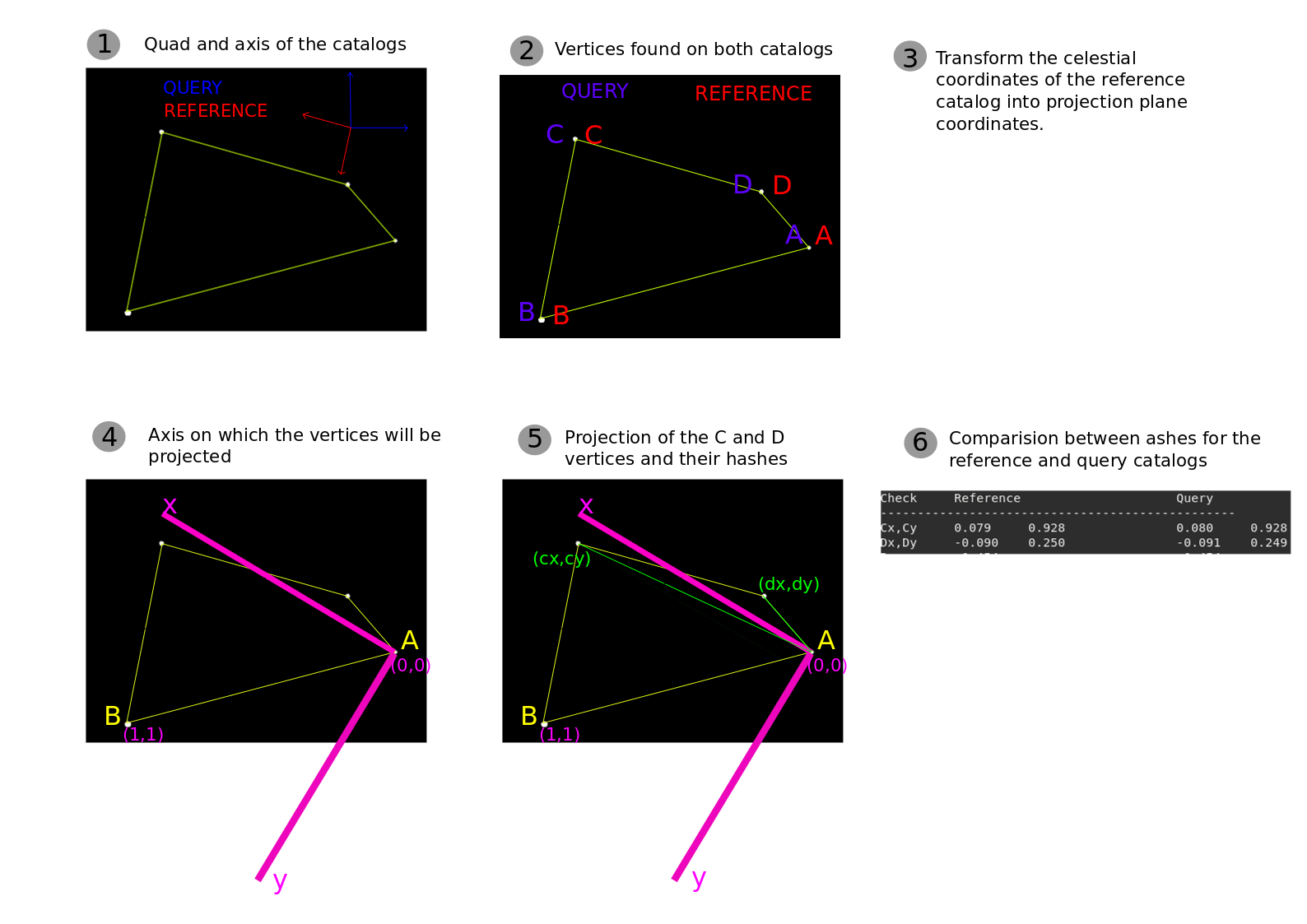

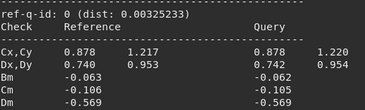

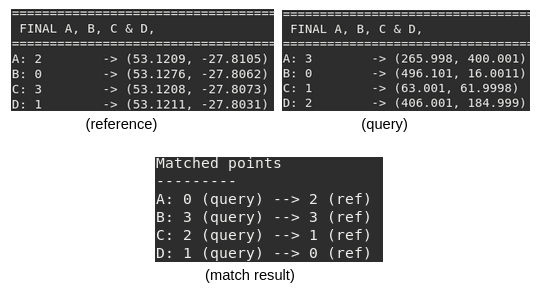

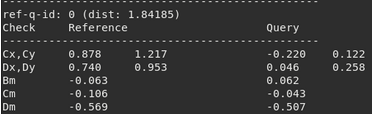

Sun 20 Jun 2021 04:19:43 PM UTC, comment #9: I think that the matching problem has been solved. Below I explain the steps:

|

Natáli Anzanello <ndanzanello> |

|

Thu 17 Jun 2021 01:51:17 PM UTC, comment #8: Continuing on the task of finding the matches, two small fixes were made on this fork: https://codeberg.org/ndanzanello/gnuastro/src/branch/astrometry

|

Natáli Anzanello <ndanzanello> |

|

Mon 20 Jul 2020 01:41:46 PM UTC, comment #7: While working through the rough outline mentioned earlier, we found that HEALPix pixels are only needed to ensure a homogeneous sampling of quads across the field. That is why we decided to leave out the HEALPix library for now, to avoid an extra dependency.
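
To illustrate the point about homogeneous sampling, here is a minimal sketch of how a plain rectangular grid could stand in for HEALPix on a single image. It is only an assumption for illustration, not the method used in Gnuastro: the function name `sample_uniform`, its parameters, and the `(x, y, magnitude)` tuple layout are all hypothetical.

```python
from collections import defaultdict


def sample_uniform(stars, width, height, ncells=4, per_cell=2):
    """Keep the `per_cell` brightest stars in each cell of an ncells x ncells grid.

    stars: iterable of (x, y, magnitude); a smaller magnitude means brighter.
    width, height: image size, in the same units as x and y.
    (Hypothetical helper, not part of Gnuastro.)
    """
    cells = defaultdict(list)
    for x, y, mag in stars:
        # Clamp so stars exactly on the upper edge fall in the last cell.
        i = min(int(x / width * ncells), ncells - 1)
        j = min(int(y / height * ncells), ncells - 1)
        cells[(i, j)].append((x, y, mag))

    selected = []
    for key in sorted(cells):
        # Sort each cell by magnitude and keep only the brightest few.
        selected.extend(sorted(cells[key], key=lambda s: s[2])[:per_cell])
    return selected
```

Building quads only from the stars returned by such a selection would spread them over the whole field instead of clustering them where bright stars happen to be dense.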

|

Mohammad Akhlaghi <makhlaghi> |

|

Sun 19 Jul 2020 10:20:47 PM UTC, comment #6: During our discussion today, we came up with a rough outline of the steps that I am attaching as a simple plain-text file here for the record. |

Mohammad Akhlaghi <makhlaghi> |

|

Wed 17 Jun 2020 03:56:49 AM UTC, comment #5: Wonderful review! Thanks Sachin ;-).

|

Mohammad Akhlaghi <makhlaghi> |

|

Tue 16 Jun 2020 10:56:19 PM UTC, comment #4: I read the paper introducing astrometry.net and also went quickly through its repository on GitHub. One thing that really bugged me was the documentation: it is incomplete, and whatever is written is more or less subtle. Anyway, the paper introduces the idea fairly well, though it is more theory-oriented than software-oriented. Here are the basic steps that it employs for matching and WCS calculations:

|

Sachin Kumar Singh <sks_15> |

|

Sun 17 May 2020 02:44:37 AM UTC, comment #3: |

Mohammad Akhlaghi <makhlaghi> |

|

Tue 12 May 2020 09:32:12 AM UTC, comment #2: I forgot to mention that the data below were taken by the Iran National Observatory (INO) Lens Array (INOLA). In particular, I am very grateful to Hamed Altafi, who took the pictures and shared them with us to test/play with. The results will be applicable to any instrument. |

Mohammad Akhlaghi <makhlaghi> |

|

Tue 12 May 2020 02:21:44 AM UTC, comment #1: To help in completing this task, I just uploaded some data to play with. A link to each uploaded file is available at the bottom of this comment.

|

Mohammad Akhlaghi <makhlaghi> |

|

Tue 12 May 2020 01:15:22 AM UTC, original submission:

Let's assume that `reference-ra-dec.fits' is a single catalog that contains the RA and Dec of many sources from a reference source, for example from the Gaia Archive.

|

Mohammad Akhlaghi <makhlaghi> |

Depends on the following items: None found

Items that depend on this one: None found


Follow 19 latest changes.

| Date | Changed by | Updated Field | Previous Value | Replaced by |
|---|---|---|---|---|
| 2022-03-24 | makhlaghi | Open/Closed | Open | Closed |
| | | Discussion Lock | Locked | None |
| 2021-08-23 | ndanzanello | Discussion Lock | None | Locked |
| 2021-08-23 | ndanzanello | Status | In Progress | Done |
| 2021-08-23 | ndanzanello | Percent Complete | 50% | 100% |
| 2021-08-23 | ndanzanello | Attached File | - | Added match_overview_complete.png, #51805 |
| 2021-06-20 | ndanzanello | Attached File | - | Added 4stars_vertices_distances_fixed.png, #51591 |
| 2021-06-20 | ndanzanello | Attached File | #51589 | Removed |
| 2021-06-20 | ndanzanello | Attached File | - | Added vertices_after_match_fixed.png, #51589 |
| | | Attached File | - | Added match_overview.png, #51590 |
| 2021-06-17 | ndanzanello | Attached File | - | Added 4stars_vertices_distances.png, #51572 |
| | | Attached File | - | Added new_dist_4stars.png, #51573 |
| | | Attached File | - | Added vertices_after_match.png, #51574 |
| | | Attached File | - | Added old_dist_4stars.png, #51575 |
| 2021-06-16 | makhlaghi | Category | Match | Astrometry |
| 2021-06-16 | makhlaghi | Assigned to | sks_15 | ndanzanello |
| 2020-07-19 | makhlaghi | Attached File | - | Added rough-outline.txt, #49513 |
| | | Percent Complete | 0% | 50% |
| 2020-05-12 | makhlaghi | Carbon-Copy | - | Added -email is unavailable- |


Corresponding source code

Since Natali's project on finding the linear WCS translation parameters has been completed, I am closing this task.

task #16136 has been defined for finding the distortions in images.
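
For readers unfamiliar with what "linear WCS parameters" means once the two catalogs are matched, the sketch below fits a linear (affine) map from pixel coordinates to sky-plane coordinates by least squares. It is an illustration under stated assumptions, not the code from this task: the names `solve3` and `fit_linear` are hypothetical, the matched pairs are assumed to be known already, and the sky coordinates are assumed to be already projected onto a flat tangent plane (so the spherical projection is ignored).

```python
def solve3(m, rhs):
    """Solve a 3x3 linear system by Gauss-Jordan elimination with pivoting."""
    a = [row[:] + [r] for row, r in zip(m, rhs)]
    for col in range(3):
        pivot = max(range(col, 3), key=lambda r: abs(a[r][col]))
        if abs(a[pivot][col]) < 1e-12:
            raise ValueError("degenerate point configuration")
        a[col], a[pivot] = a[pivot], a[col]
        for r in range(3):
            if r != col:
                factor = a[r][col] / a[col][col]
                a[r] = [v - factor * w for v, w in zip(a[r], a[col])]
    return [a[i][3] / a[i][i] for i in range(3)]


def fit_linear(xy, uv):
    """Least-squares fit of u = a*x + b*y + c and v = d*x + e*y + f.

    xy: matched pixel positions [(x, y), ...]
    uv: matching tangent-plane positions [(u, v), ...] (assumed pre-projected)
    Returns [[a, b, c], [d, e, f]].
    """
    rows = [(x, y, 1.0) for x, y in xy]
    # Normal equations (A^T A) p = A^T t, shared by both output axes.
    ata = [[sum(r[i] * r[j] for r in rows) for j in range(3)] for i in range(3)]
    params = []
    for k in range(2):
        atb = [sum(r[i] * t[k] for r, t in zip(rows, uv)) for i in range(3)]
        params.append(solve3(ata, atb))
    return params
```

In this picture, the 2x2 block `[[a, b], [d, e]]` plays the role of the rotation/scale matrix and `(c, f)` the offset; at least three non-collinear matched pairs are needed for the fit to be defined.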